Configuring and deploying the connector

This page describes how to configure the connector for log retrieval.

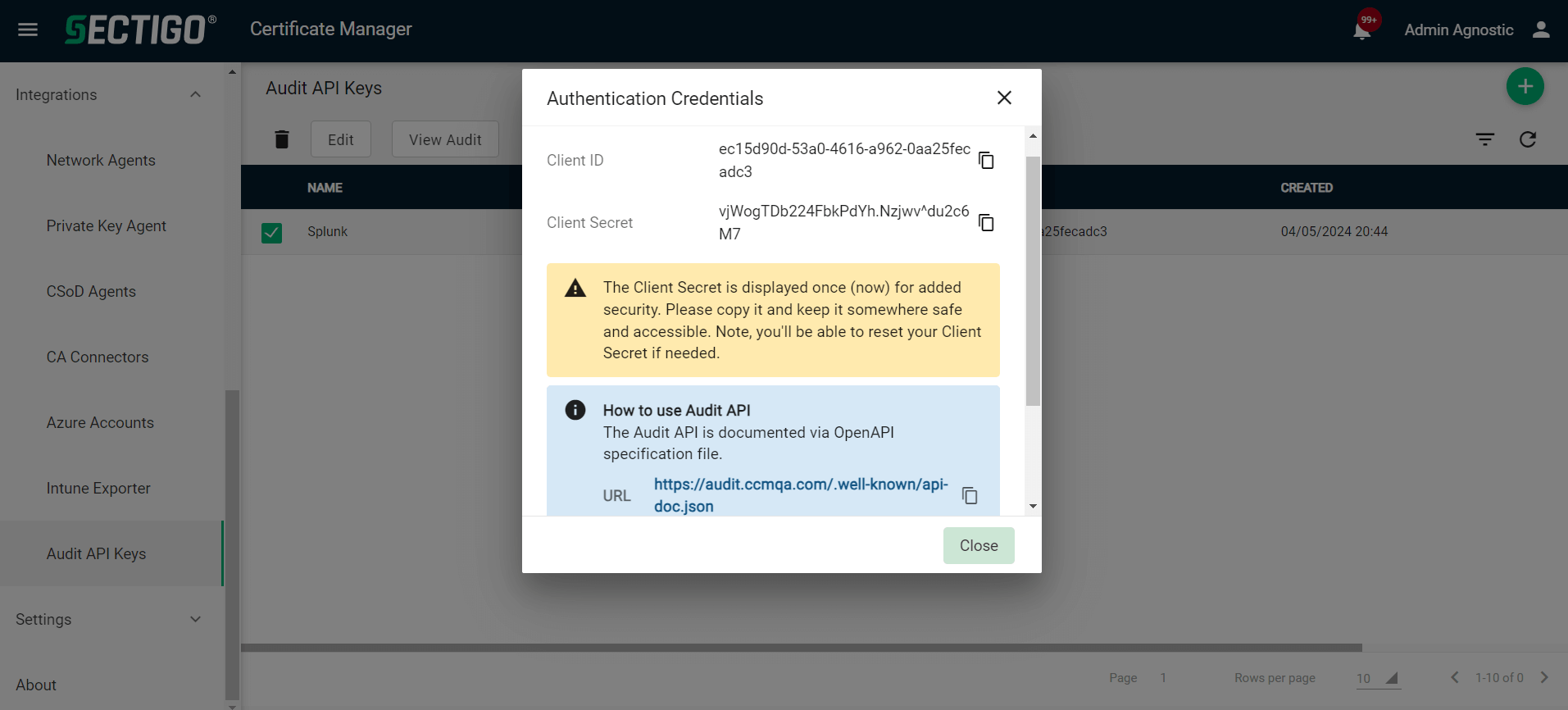

Obtain the SCM Audit API key

- Log in to SCM at https://cert-manager.com/customer/<customer_uri> with the MRAO administrator credentials provided to your organization.

  Sectigo runs multiple instances of SCM. The main instance of SCM is accessible at https://cert-manager.com. If your account is on a different instance, adjust the URL accordingly.

- Select .

- Click Add to create an Audit API key.

- Give a name to your key, then click Save.

- Make a note of the URL, Client ID, and Client Secret values. You will need them to configure Datadog.

Package contents

The exact process for deploying the connector depends on your infrastructure and deployment workflow.

The package contains the following files:

- sectigo-audit-datadog: The connector, an executable binary that performs audit log synchronization.

- .env: The configuration template file for you to customize.

- Dockerfile: An optional Dockerfile to build an image that can run the connector.

- docker-compose.yaml: An example docker-compose file to run the connector with a Redis storage backend.

- README.md: Instructions for configuring and running the connector.

- integration EULA v1.0.pdf: The end-user license agreement.

Configure the connector

Configure the connector by customizing the .env file, referring to the following tables of parameters.

DD_SITE=datadoghq.eu

DD_API_KEY=75f3fbe2a8e20cb3e8ae8b188bcb5077

SECTIGO_AUDIT_API_URL=https://audit.enterprise.sectigo.com

SECTIGO_AUDIT_API_CLIENT_ID=a40854c0-279c-4187-90ff-bd9fb9939892

SECTIGO_AUDIT_API_CLIENT_SECRET==m0sfj!RQZNp]d2C8DIf1qM8V6jjnAEy

SECTIGO_AUDIT_STORAGE_BACKEND=localfs

SECTIGO_AUDIT_LOCAL_STORAGE_PATH=/state

Datadog configuration

| Parameter | Description | Default | Required |
|---|---|---|---|
| DD_SITE | Datadog site to send logs to (datadoghq.com, datadoghq.eu, etc.) | datadoghq.com | No |
| DD_API_KEY | API key for authenticating with Datadog | | Yes |
| | Tags to associate with logs (format: | | No |
| | Hostname to associate with logs | Local machine hostname | No |

Sectigo audit API configuration

| Parameter | Description | Default | Required |
|---|---|---|---|
| SECTIGO_AUDIT_API_URL | Sectigo Audit API endpoint URL | | Yes |
| SECTIGO_AUDIT_API_CLIENT_ID | Client ID for API authentication | | Yes |
| SECTIGO_AUDIT_API_CLIENT_SECRET | Client secret for API authentication | | Yes |
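Before starting the connector, it can help to confirm that every required parameter is set. The following is a minimal Python sketch and is not part of the package: `parse_env` and `missing_required` are hypothetical helper names, and the parser handles only plain KEY=VALUE lines, which may differ from how the connector itself reads the file.

```python
# Parameters marked "Yes" in the Required column of the tables above.
REQUIRED = (
    "DD_API_KEY",
    "SECTIGO_AUDIT_API_URL",
    "SECTIGO_AUDIT_API_CLIENT_ID",
    "SECTIGO_AUDIT_API_CLIENT_SECRET",
)


def parse_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, so values may themselves contain '='.
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def missing_required(values: dict) -> list:
    """Return the required parameter names that are absent or empty."""
    return [name for name in REQUIRED if not values.get(name)]
```

To use it, read the customized .env file as text, pass the result of `parse_env` to `missing_required`, and stop the deployment if the returned list is not empty.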

Sync configuration

| Parameter | Description | Default | Required |
|---|---|---|---|
| | Log output format ( | | No |
| | Minimum log level ( | | No |
| SECTIGO_AUDIT_SYNC_LOOP | Whether to run continuously ( | | No |
| | Interval between sync operations (e.g., | | No |
| | How far back to fetch logs on first sync (max: | | No |
| SECTIGO_AUDIT_STORAGE_BACKEND | Backend for storing sync state ( | | No |

For scheduled environments, such as AWS Lambda triggered by a cron schedule, you might want to set SECTIGO_AUDIT_SYNC_LOOP=false so that each invocation performs a single sync operation.
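A scheduled job could wrap the connector along the following lines. This is only a sketch under stated assumptions: the binary location `./sectigo-audit-datadog`, the helper names `single_sync_env` and `run_single_sync`, and the timeout are hypothetical, and the sketch assumes that the connector reads its parameters from environment variables and exits once the single sync completes. Check README.md in the package for the actual invocation.

```python
import os
import subprocess

# Assumed location of the connector binary; adjust to your deployment.
CONNECTOR_PATH = "./sectigo-audit-datadog"


def single_sync_env(base_env=None):
    """Return a copy of the environment with continuous syncing disabled."""
    env = dict(os.environ if base_env is None else base_env)
    env["SECTIGO_AUDIT_SYNC_LOOP"] = "false"
    return env


def run_single_sync(timeout_seconds=300):
    """Run the connector once and return its exit code."""
    result = subprocess.run(
        [CONNECTOR_PATH],
        env=single_sync_env(),
        timeout=timeout_seconds,  # raises subprocess.TimeoutExpired if exceeded
        check=False,
    )
    return result.returncode
```

The scheduler then calls `run_single_sync()` on each trigger. Because every run starts a fresh process, the sync state must live in a storage backend that persists between runs.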

The sync interval accepts values from 5s to 18h. Values closer to 18 hours increase the risk of missing logs, because Datadog drops logs older than 18 hours.
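The 5s to 18h range can be checked before deployment. The sketch below is illustrative only: `parse_interval` and `check_interval` are hypothetical helpers, and the sketch assumes a single number followed by one unit (s, m, or h). The connector may accept other duration forms that this sketch would reject.

```python
import re

MIN_SECONDS = 5              # lower bound: 5s
MAX_SECONDS = 18 * 60 * 60   # upper bound: 18h

_UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600}
_INTERVAL = re.compile(r"(\d+)([smh])")


def parse_interval(value: str) -> int:
    """Convert a value such as '30m' into seconds (assumed format)."""
    match = _INTERVAL.fullmatch(value.strip())
    if match is None:
        raise ValueError(f"unrecognized interval: {value!r}")
    return int(match.group(1)) * _UNIT_SECONDS[match.group(2)]


def check_interval(value: str) -> int:
    """Return the interval in seconds, or raise if it is outside 5s to 18h."""
    seconds = parse_interval(value)
    if not MIN_SECONDS <= seconds <= MAX_SECONDS:
        raise ValueError(f"interval {value!r} is outside the 5s to 18h range")
    return seconds
```

A value that passes this check is still not necessarily a good choice: the closer the interval gets to 18 hours, the less margin remains before Datadog drops the logs.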

Storage backend—local file system

| Parameter | Description | Default | Required |
|---|---|---|---|
| SECTIGO_AUDIT_LOCAL_STORAGE_PATH | Path for storing state | Current working directory | No |

Storage backend—Redis

| Parameter | Description | Default | Required |
|---|---|---|---|
| | Redis server address (host:port) | | Yes (for Redis) |
| | Redis server password | | No |

Storage backend—AWS S3

| Parameter | Description | Default | Required |
|---|---|---|---|
| | S3 bucket name | | Yes (for S3) |
| | Path prefix within bucket | | No |

Make sure the associated IAM role has the following permissions on the S3 bucket:

- s3:HeadBucket

- s3:GetObject

- s3:PutObject

If the connector is running in an AWS environment, it automatically uses the AWS IAM role for authentication. No additional configuration is needed. If the connector is running outside AWS but you still want to use S3 as a storage backend, make sure the environment has default AWS credentials. For more information, see Authentication and access using AWS SDK.

Storage backend—Google cloud storage

| Parameter | Description | Default | Required |
|---|---|---|---|
| | GCS bucket name | | Yes (for GCS) |
| | Path prefix within bucket | | No |

Make sure the associated service account has the following permissions on the GCS bucket (Storage Object Admin covers all of these permissions):

- storage.buckets.get

- storage.objects.create

- storage.objects.get

If the connector is running in a GCP environment, it automatically uses the instance’s IAM role for authentication. No additional configuration is needed. If the connector is running outside GCP but you still want to use GCS as a storage backend, make sure the environment has GCP Application Default Credentials. For more information, see Set up application default credentials.